The AI landscape saw a seismic shift on August 6 when John Schulman, a key figure at OpenAI, announced his departure to join Anthropic, a direct competitor. On the same day, OpenAI’s co-founder and President Greg Brockman revealed he would take his first significant break in nine years, sparking concerns about the company’s future. Schulman’s move to Anthropic, specifically to work on the large language model Claude, underscores the intense competition in the AI space.

The ultimate goal of that competition: the creation of artificial general intelligence (AGI).

The major players in this competition include Microsoft, OpenAI, Meta, Alphabet (Google), xAI (Elon Musk’s venture), Apple, and Anthropic. But let’s not forget the rising star, Mistral AI, which is quickly making its mark. For those who want to dive deeper into the dynamics among these AI titans, I’ve discussed them extensively in The BitVision – AI Titans: Redefining Industries and Pioneering the Future.

Developing large language models (LLMs) requires enormous computational power, often fueled by cutting-edge GPUs and billions of dollars in investment. Companies are pouring vast amounts of money into this space, with each iteration of models pushing the boundaries of what AI can achieve. It’s a race to harness the most powerful tools available and scale them in ways that make AI accessible and affordable.

The Incredible Power of Rewriting Reality

Today’s LLMs aren’t just theoretical constructs; they’re reshaping reality, wielding the power to redefine how we understand history, science, and biology—not necessarily as they are, but as they could be molded by those who control the models. The winners in this race will possess an unprecedented ability to influence the very fabric of knowledge. For many, interacting with a large language model like Google’s Gemini 1.5, Meta’s Llama 3.1, or OpenAI’s GPT-4o feels like consulting an all-knowing oracle. You pose a question, and within seconds—or even milliseconds—the answer materializes as if by magic.

In February, we got a glimpse of this future when Google unveiled its eagerly awaited Gemini 1.5 model, showcasing how foundational LLMs can instantly generate complex insights. Given their power, these tools present possibilities that are both exhilarating and a bit unsettling.

Image generated by OpenAI’s DALL-E 3: a pirate standing on a ship in the middle of a choppy ocean.

But here’s an important consideration: scaling AI isn’t just about going big. The notion of “go big or go home” may dominate headlines, but in practice, the ability to scale and make AI accessible to billions hinges on more than raw power. While GPT-4o is a pinnacle of AI achievement, it’s also expensive to operate, limiting its broader applicability.

This is where smaller LLMs come into play. Most of us don’t need an AI that can do everything; we need one that excels at the specific tasks we encounter daily, whether at work or at home. Smaller models like GPT-4o-mini, which cost less and require fewer resources, are designed to meet these needs efficiently. The same companies racing to build massive LLMs with hundreds of billions, even trillions, of parameters are equally focused on developing these smaller, task-specific models.

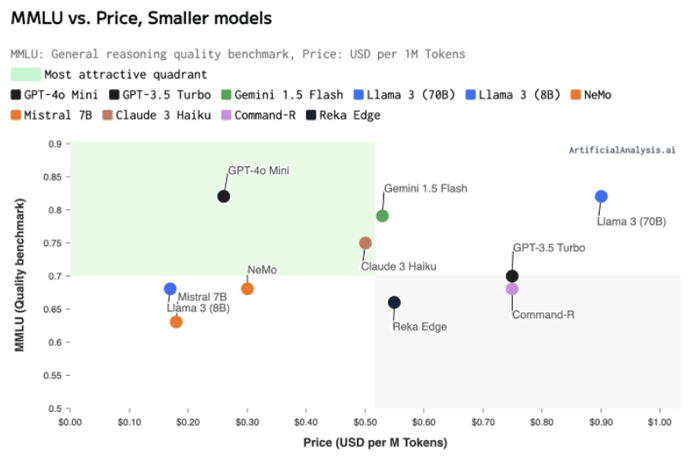

The competition is fierce, with models like GPT-4o-mini making waves in the industry. This small but mighty model excels in performance, scoring 82% on the Massive Multitask Language Understanding (MMLU) benchmark, and it does so at a fraction of the cost of its larger counterparts.

The Industry Shift Towards Inference

The industry is moving proactively to meet market demands, recognizing that AI isn’t a one-size-fits-all technology. Instead, we’re seeing the development of smaller models optimized for specific tasks like scheduling, design, process automation, and more. These models maintain powerful natural language processing capabilities while being designed for efficiency and scalability. This isn’t a race to the bottom; it’s a race to scale. AI is being developed not just for the top 10% of the global population but to reach the world’s connected population, which now exceeds 5 billion.

Source: Artificial Analysis

The data above highlights a field of small LLMs, graphing their performance against operational costs. Models like GPT-4o-mini show that smaller LLMs can offer powerful performance at a much lower cost, making them more accessible to a wider audience. This trend towards smaller, more efficient models is likely one of the reasons John Schulman decided to join Anthropic. Schulman, who had been deeply involved in AI alignment research at OpenAI, may see greater potential in the scalability and targeted application of smaller LLMs, which align with the market’s evolving needs. At Anthropic, he likely found an environment where he could explore these new challenges and focus more intensively on hands-on technical work that pushes the boundaries of what AI can achieve.

In conclusion, the development and scaling of AI are not just about building bigger models; they are about finding the right balance between power, efficiency, and accessibility. OpenAI’s GPT-4o-mini stands as a testament to this approach, pushing the boundaries of what smaller models can achieve and setting the stage for the future of AI. As the competition heats up, the real challenge will be maintaining the delicate balance between innovation and practical application, a challenge both OpenAI and Anthropic are keenly aware of as they navigate the ever-evolving AI ecosystem.