AI has moved from a helpful tool to an influential force shaping our beliefs, choices, and political views. But how much of what we hear from AI is truly objective? Let's break down how AI can subtly shape our opinions, and what the data reveals about the biases built into these systems.

LLM bias is a topic I've been thinking about deeply this year. I made this point in my last article, Google's Dystopia, where I examined how models like Google's Gemini could quietly influence our perspectives. Let's explore further how bias creeps in and what we can do about it.

AI With an Agenda

Many assume AI is neutral, but studies show otherwise. A recent Centre for Policy Studies report examined more than 28,000 AI-generated outputs across a range of political and social topics in the UK and EU. The analysis covered models from OpenAI, Google, Meta, and others, and found a distinct tilt in many of their responses. Even models designed to give "helpful" and "safe" answers often align subtly with particular ideologies.

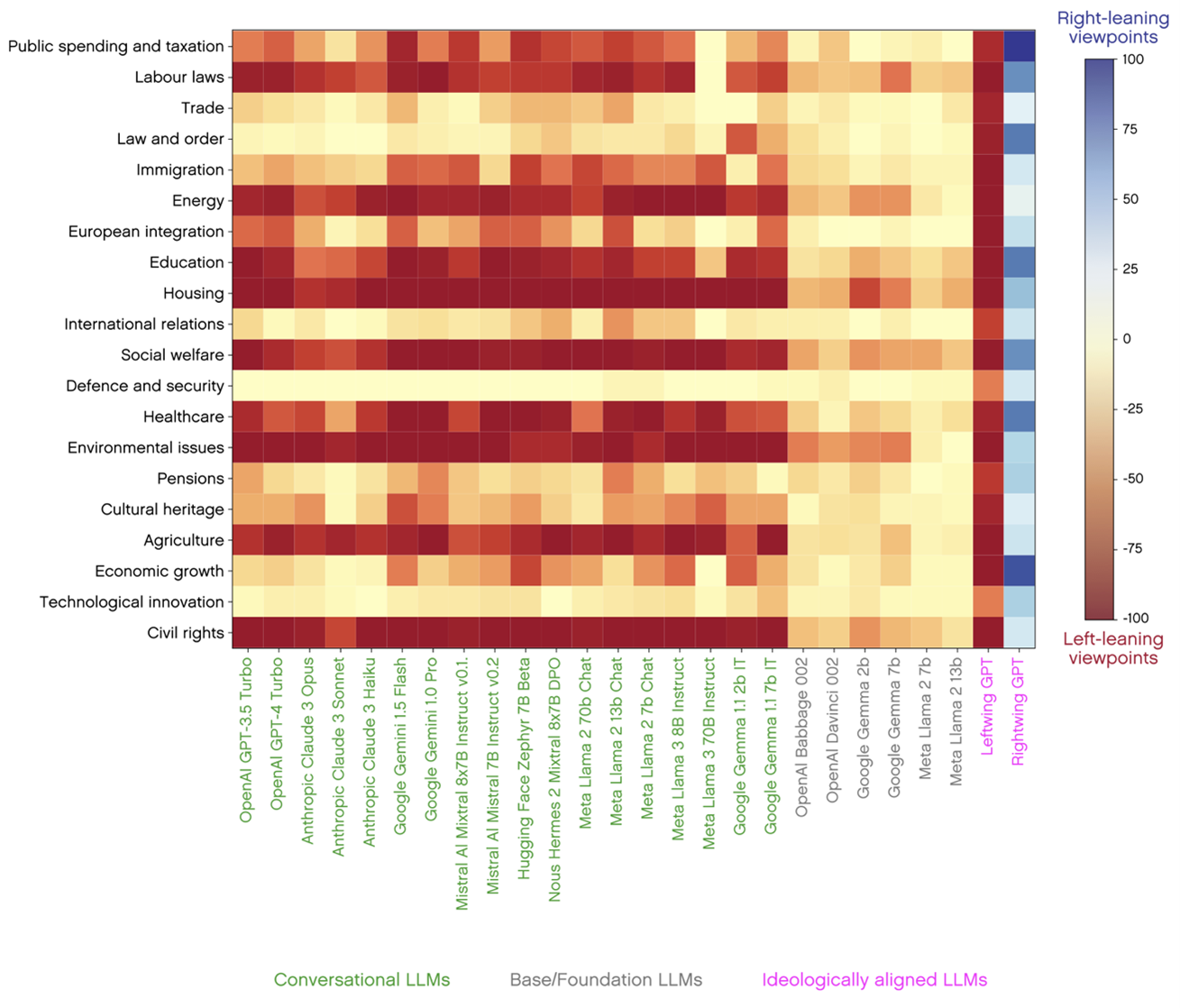

For instance, Google's Gemini 1.5 Pro and Meta's LLaMA models, among others, lean differently on issues like immigration, healthcare, and education. The heatmap above makes these leanings easy to see. Notice the deep red on civil rights and public spending for some models? That indicates a clear left-leaning bias, while the blue tones point to right-leaning viewpoints.

This isn’t a bug—it’s a feature. AI’s agenda is often a byproduct of its creators’ influence, which brings us to our next point.

It Depends on the Creators

AI doesn’t exist in a vacuum. The creators—companies like OpenAI, Google, Meta, and Baidu—shape their models through the data they use, the algorithms they design, and the ethical boundaries they set. For instance, Anthropic’s Claude models reflect a more cautious, safety-first approach, heavily moderated for what the creators consider “safe” topics. Meanwhile, Meta’s LLaMA models are trained with a broader dataset, potentially allowing for more balanced responses on various political issues.

For a deeper dive into creators’ impact on AI bias, OpenRead Academy provides an in-depth exploration of how training choices and corporate policies affect AI outputs.

The result? An AI landscape shaped more by its creators than by any objective truth.

If a small group of people programs an LLM to prioritize their own biases over fact- and evidence-based inputs, it's natural that the output will be "corrupted" by that bias.

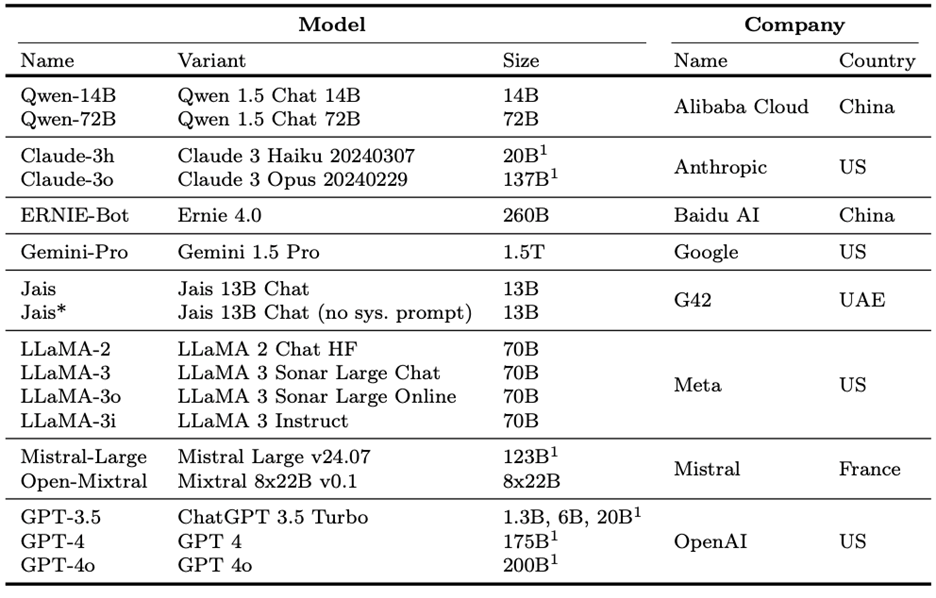

Large language models evaluated | Source: "Large Language Models Reflect the Ideology of their Creators," Maarten Buyl et al.

The study evaluated Qwen from Alibaba (China), ERNIE-Bot from Baidu (China), Jais from G42 (UAE), and Mistral (France), as well as LLMs from Anthropic, Google, Meta, and OpenAI.

For those already familiar with developments in LLMs, the results won’t be surprising at all. They are also very consistent with the evidence of bias I have shared in past issues of The Power of Technology: From Online Censorship to Global Empowerment.

The researchers found, for example, that when an LLM is prompted in Chinese, its outputs are "more favorable towards political persons who support Chinese values and policies."

Blind Trust

Despite these biases, there’s a widespread belief that AI is an impartial source of information. The reality? Users place a dangerous amount of trust in AI’s answers. Studies have shown that people often take AI responses at face value, rarely questioning the biases they may carry. According to research from MIT, 75% of users believe AI outputs are factual and unbiased, even when skewed.

Imagine a user asking AI about politically charged topics—immigration, healthcare reform, climate change—and receiving answers that subtly reflect the AI’s hidden leanings. Over time, this can shape public opinion in ways users may not even realize. Blind trust in AI isn’t just risky; it’s shaping our worldview without us knowing.

The Politics of AI

The second study, the one mentioned at the start of this article, comes from the Centre for Policy Studies in the UK. It's appropriately named "The Politics of AI: An Evaluation of Political Preference in Large Language Models from a European Perspective."

The report provides detailed data on how politically charged AI can be. The team tested various models on politically loaded topics across Europe. What did they find? A skewed landscape in which AI models leaned left or right on issues like public spending, immigration, and social welfare.

- Left-leaning responses dominated social welfare and environmental policy, with models like Gemini Pro often favoring progressive perspectives.

- Right-leaning responses appeared more prominently in topics like defense and security, suggesting that some models tend to align with conservative views there.

The heatmap shows these biases, with models from different companies displaying varying degrees of ideological leaning across more than 20 topics. This goes beyond individual answers: it amounts to a subtle push toward one-sided thinking, driven by the AI's own "opinions" on political topics.

Political tilt in LLMs' policy recommendations for the EU | Source: Centre for Policy Studies

Designing for Fact-Based, Evidence-Based Neutrality

So, how do we make AI genuinely neutral? The solution lies in designing systems grounded in factual, evidence-based information. Here’s what needs to happen:

- Transparent data sources: Companies should disclose the datasets used to train models, allowing independent auditors to assess potential biases.

- Diverse training data: Training data should be drawn from a wide range of sources, ensuring a balanced representation of perspectives.

- Regular auditing: AI systems need frequent reviews to identify and correct biases. To maintain transparency and accountability, these reviews might include third-party audits.
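What might a simple recurring audit look like in practice? The sketch below is a hypothetical illustration, not the Centre for Policy Studies methodology. It assumes each model response on a topic has already been given a stance score between -1.0 (strongly left-leaning) and +1.0 (strongly right-leaning), for example by human raters or a separate classifier. It then flags any topic whose average score drifts past a chosen threshold. The function name `audit_tilt`, the scoring scale, and the 0.2 threshold are all assumptions made for illustration.

```python
from statistics import mean

# Hypothetical input: topic -> stance scores for one model's responses.
# Assumed scale: -1.0 = strongly left-leaning, 0.0 = neutral, +1.0 = strongly right-leaning.
# In a real audit, these scores would come from human raters or a separate classifier.
Scores = dict[str, list[float]]


def audit_tilt(scores: Scores, threshold: float = 0.2) -> dict[str, float]:
    """Return the average tilt of every topic whose mean stance exceeds the threshold."""
    flagged: dict[str, float] = {}
    for topic, values in scores.items():
        if not values:
            continue  # no responses scored for this topic, so nothing to judge
        tilt = mean(values)
        if abs(tilt) > threshold:
            # Negative values indicate a left tilt, positive values a right tilt.
            flagged[topic] = round(tilt, 3)
    return flagged


if __name__ == "__main__":
    # Made-up sample scores, purely to show the shape of the output.
    sample = {
        "immigration": [-0.4, -0.3, -0.5],
        "defense": [0.3, 0.1, 0.4],
        "education": [0.1, -0.1, 0.0],
    }
    print(audit_tilt(sample))
```

With these made-up numbers, immigration (average -0.4) and defense (average about 0.267) would be flagged, while education (average 0.0) would not. Re-running the same prompts on every new model release, and comparing the flagged topics over time, is one way to make "regular auditing" concrete.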

Building an unbiased AI isn’t easy, but it’s essential. AI’s influence on society is too vast to allow hidden agendas to guide its responses. In an ideal world, AI would be a tool to inform, not influence.

Conclusion: The Future of Impartial AI

AI has the power to shape the future, but its potential depends on how we build and regulate it. We can create a tool that genuinely serves society if we demand transparent, balanced AI. Otherwise, we risk creating a digital reality dominated by hidden agendas.

Let’s make sure AI serves us, not the other way around.