What’s the first thing that comes to mind when you hear “chatbot” in the U.S.?

Yep, ChatGPT.

That’s what market dominance looks like. OpenAI got there first, and now most people think of ChatGPT before anything else—even though Google’s Gemini, Anthropic’s Claude, Meta’s Llama, and xAI’s Grok all compete at the same level. First-mover advantage is real.

But in France, they don’t call it ChatGPT.

They call it Le Chat.

Mistral’s Big Play for Europe

Paris-based Mistral is Europe’s boldest attempt to challenge U.S. dominance in AI. Founded in 2023, the company has raised $1.2 billion in less than two years—tiny compared to OpenAI or Google, but enough to make waves.

Mistral’s flagship product, Le Chat, is a generative AI assistant designed for life and work. It does everything its U.S. counterparts do, with one big difference: the company behind it embraces open source.

While OpenAI, Google, and Anthropic keep their flagship models closed and reachable only through their own apps and APIs, Mistral releases the weights of many of its models under open licenses. Businesses and developers can download those models and fine-tune them for specific tasks, which makes them more adaptable than closed-source alternatives.

Le Chat is also rapidly gaining traction. When its mobile version launched, it quickly topped the App Store’s productivity rankings, briefly surpassing ChatGPT and DeepSeek. This suggests strong demand for AI models that combine high performance with open accessibility.

But here’s where it gets exciting.

The World’s Fastest AI Assistant

Speed matters in AI. The faster a model can infer (generate answers in real time), the more useful it is. That’s where Le Chat shines.

Mistral’s latest announcement claims that Le Chat is the fastest AI assistant in the world. According to Mistral, it outperforms competitors by a massive margin, thanks to one critical piece of technology: Cerebras’ semiconductor chips.

Cerebras: The Secret Weapon Behind Le Chat

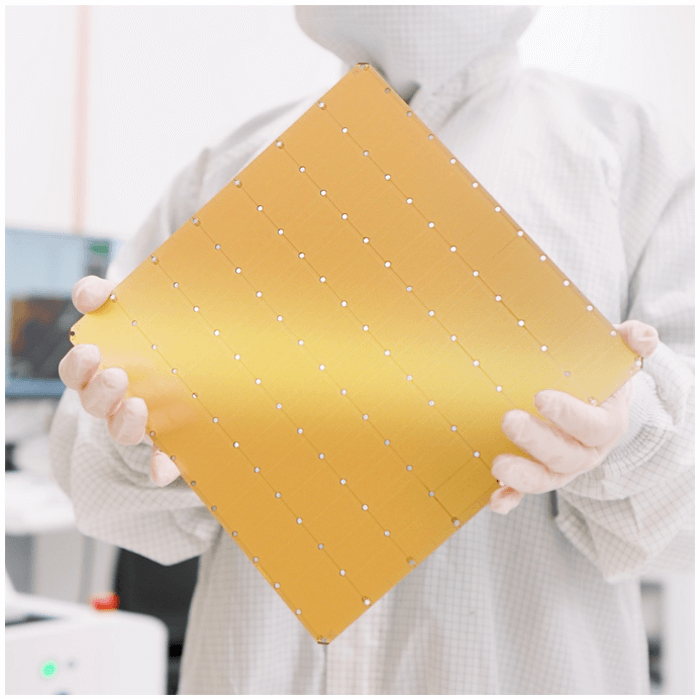

If you haven’t heard of Cerebras, you will soon. This private semiconductor company has built a game-changing AI chip: the Wafer Scale Engine 3 (WSE-3). Unlike traditional AI chips, which require multiple GPUs working together, Cerebras built one massive chip to handle inference workloads at an entirely new scale.

Souce: Cerebras

The result? Blazing-fast AI performance.

Check out these numbers from Cerebras, showing how long each assistant took to complete the same response:

- Le Chat (on Cerebras): 1.3 seconds

- Anthropic’s Claude: 19 seconds

- OpenAI’s GPT-4o: 46 seconds

That’s not a tiny difference. That’s more than an order of magnitude faster.
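To put “an order of magnitude” in concrete terms, the short Python check below divides each competitor’s time by Le Chat’s. It uses only the three figures quoted above; the dictionary and helper names are illustrative, not part of any Cerebras or Mistral tooling.

```python
# Response times in seconds, as quoted above from Cerebras's comparison.
RESPONSE_TIMES = {
    "Le Chat (on Cerebras)": 1.3,
    "Anthropic's Claude": 19.0,
    "OpenAI's GPT-4o": 46.0,
}
BASELINE = "Le Chat (on Cerebras)"


def speedup(other_seconds: float, baseline_seconds: float) -> float:
    """Return how many times faster the baseline finished than the other assistant."""
    return other_seconds / baseline_seconds


for name, seconds in RESPONSE_TIMES.items():
    if name == BASELINE:  # no point comparing Le Chat with itself
        continue
    ratio = speedup(seconds, RESPONSE_TIMES[BASELINE])
    print(f"Le Chat vs {name}: {ratio:.1f}x faster")
```

The ratios come out at roughly 14.6x against Claude and 35.4x against GPT-4o, both comfortably above 10.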

Inference speed is crucial because it dictates how human-like an AI feels. The faster it responds, the more seamless the experience. And right now, Le Chat is setting the new standard.

Source: Cerebras
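Why does raw generation speed translate so directly into how an assistant feels? A common back-of-the-envelope model is: total wait ≈ time to first token + answer length ÷ tokens generated per second. The Python sketch below applies that model. Every input is a hypothetical value chosen for illustration, not a measurement of Le Chat, Claude, or GPT-4o.

```python
def response_time(output_tokens: int, tokens_per_second: float,
                  time_to_first_token: float) -> float:
    """Rough end-to-end wait: startup delay plus time to stream every token."""
    return time_to_first_token + output_tokens / tokens_per_second


# HYPOTHETICAL inputs for illustration only; these are not benchmark results.
ANSWER_TOKENS = 500        # length of a medium-sized answer, in tokens
FIRST_TOKEN_DELAY = 0.3    # seconds before the first token appears

for label, throughput in [("fast setup", 1000.0), ("slower setup", 50.0)]:
    seconds = response_time(ANSWER_TOKENS, throughput, FIRST_TOKEN_DELAY)
    print(f"{label}: {seconds:.1f} s")
```

The model ignores network latency and queueing, but it shows how a 20x jump in tokens per second turns a ten-second pause into an answer that arrives in under a second.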

The AI Shift: From Training to Inference

For the last two years, AI companies have been in an arms race to build the biggest and smartest models. But there’s a shift happening. Now that models like GPT-4, Claude, and Gemini have become highly capable, the real battle is moving toward inference efficiency.

In simple terms:

- Training = Learning new skills (expensive, slow, high energy consumption)

- Inference = Applying what’s learned in real time (critical for user experience, cheaper if optimized)

As a result, spending is shifting toward inference infrastructure. Amazon alone expects to spend around $100 billion on capital expenditures in 2025, most of it on AI infrastructure. The demand for real-time AI responses is exploding.

Mistral is ahead of the curve in this transition. Le Chat integrates two of Mistral’s own models: Mistral Large for text and Pixtral Large for multimodal tasks like image recognition and document analysis. This combination lets Le Chat handle everything from creative writing to complex data processing.

AI Spending: The Billion-Dollar Avalanche

Despite concerns about AI costs, companies aren’t slowing down. Investment is accelerating.

- OpenAI is closing a $40 billion funding round, nearly doubling its valuation from $157 billion to $300 billion in four months.

- Amazon is pouring $100 billion into AI infrastructure.

- Palantir (PLTR) is now valued at $256 billion, with forecasted 2025 revenue of just $3.7 billion—trading at 69 times revenue.
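The multiples in this list are simple ratios. Here is a quick Python check that uses only the figures quoted above, in billions of U.S. dollars; the variable names are illustrative.

```python
# Figures quoted in the list above, in billions of U.S. dollars.
OPENAI_VALUATION_BEFORE = 157
OPENAI_VALUATION_AFTER = 300
PALANTIR_VALUATION = 256
PALANTIR_REVENUE_2025_FORECAST = 3.7

# How much OpenAI's valuation grew between the two figures.
valuation_growth = OPENAI_VALUATION_AFTER / OPENAI_VALUATION_BEFORE

# Price-to-sales: valuation divided by (forecast) annual revenue.
palantir_price_to_sales = PALANTIR_VALUATION / PALANTIR_REVENUE_2025_FORECAST

print(f"OpenAI valuation growth: {valuation_growth:.2f}x")
print(f"Palantir price-to-sales: {palantir_price_to_sales:.1f}x")
```

The first ratio comes out at about 1.91x, just shy of a true doubling; the second at about 69.2x, matching the “69 times revenue” figure.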

These numbers aren’t just enormous. They’re insane. Yet investors keep piling in, because revenue growth keeps beating even aggressive forecasts.

Four months ago, OpenAI projected revenue of $11.7 billion for 2025. Now, analysts are predicting it could hit $30 billion, which would make its $300 billion valuation seem almost… reasonable.
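The same price-to-sales arithmetic shows what “almost reasonable” means. This sketch divides OpenAI’s $300 billion valuation by each revenue figure in the paragraph above and, for simplicity, treats projected revenue as if it were already booked. The helper name is illustrative.

```python
def price_to_sales(valuation_bn: float, revenue_bn: float) -> float:
    """Valuation divided by annual revenue, both in billions of U.S. dollars."""
    return valuation_bn / revenue_bn


OPENAI_VALUATION = 300  # billions of U.S. dollars, from the text

# Revenue figures quoted in the paragraph above.
scenarios = [
    ("earlier $11.7B projection", 11.7),
    ("$30B analyst estimate", 30.0),
]

for label, revenue in scenarios:
    multiple = price_to_sales(OPENAI_VALUATION, revenue)
    print(f"OpenAI at the {label}: {multiple:.1f}x revenue")
```

That gives roughly 25.6x on the earlier projection and 10.0x on the higher estimate, a fraction of the 69x multiple quoted for Palantir.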

Meanwhile, Europe is stepping up its AI game. The OpenEuroLLM alliance, which includes over 20 leading European research institutes and computing centers, is pushing for AI development free from U.S. and Chinese dominance. France recently showcased its AI ambitions at the AI Action Summit in Paris, with Mistral and Le Chat taking center stage. This signals that Europe is no longer just a spectator in the AI race.

The Bottom Line: AI’s Next Chapter

Le Chat is proving that AI dominance isn’t just about who builds the most innovative models—it’s about who makes them fast, efficient, and accessible.

Mistral and Cerebras are showing that hardware and software innovation go hand in hand. OpenAI may still be the king, but new challengers are rewriting the rules of the game.

And if history has taught us anything, it’s this: Being first doesn’t always mean staying on top.